What Is LLMInsight?

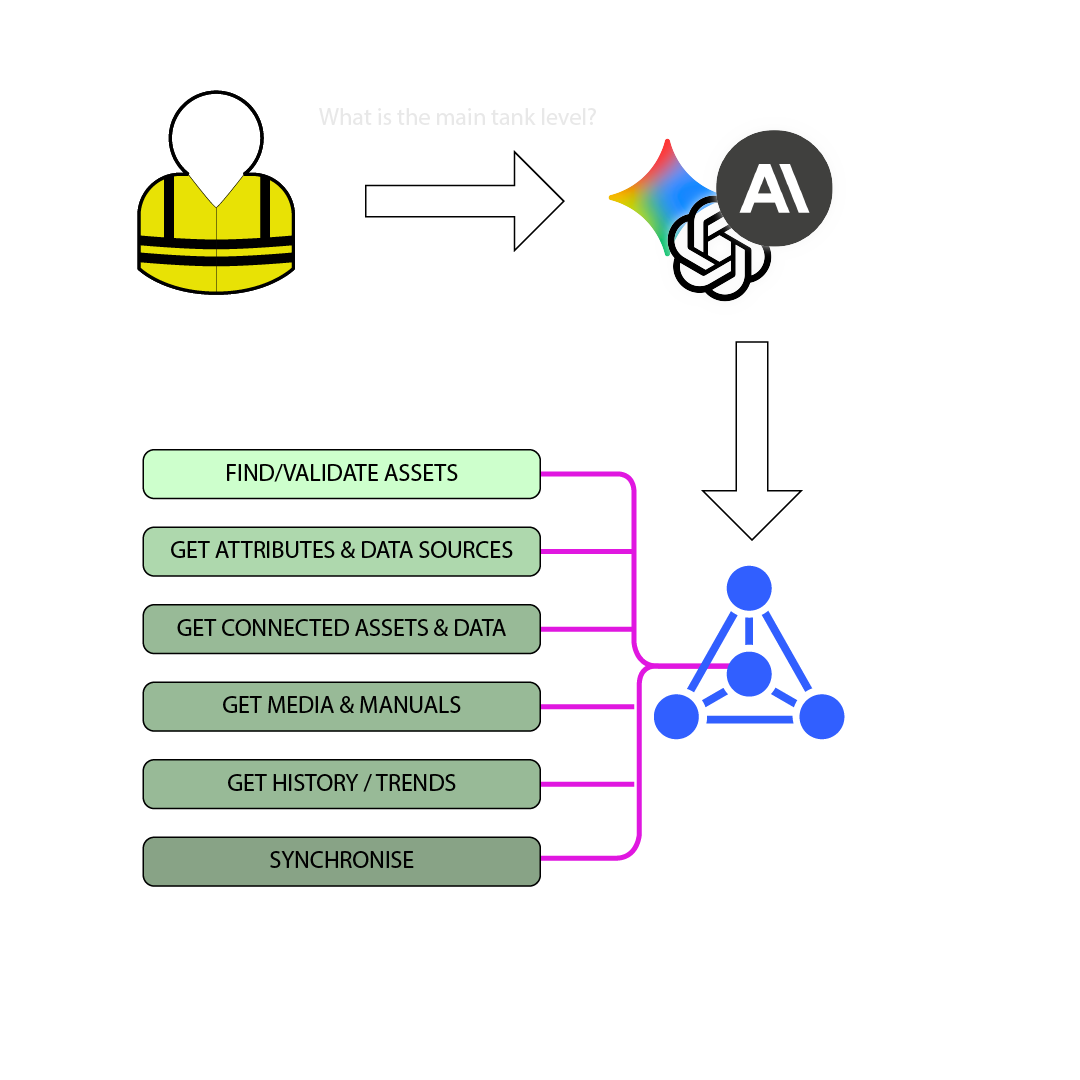

LLMInsight is designed to guide people who are uncomfortable with, or unsure how to use, large language models (LLMs) in high-speed data environments.

Instead of offering an open chat – which can be awkward for people who can’t think of suitable prompts or who have accessibility issues – it lets users choose from a predefined set of prompts to ask their chosen LLM about their assets.

This list of prompts can be customised, but the basic addon comes with prompts such as:

What is the current status of the asset?

Provides a simple, plain-English report about the current state of the asset, including insights about possible problems and whether its values appear reasonable.

How has the asset been performing recently?

Similar to the option above, but considers the status over time, giving a more in-depth look at what the asset has been doing over the last several hours.

What sensing could we add to this asset?

Helps identify sensor blind spots where additional sensing might improve the capabilities of engineers and AI.

What alarms should this asset have?

Suggests alarms, their logic and the setpoints you might want to use to detect issues on the asset.

LLMInsight guides staff through simple interactions with large language models. It is compatible with a wide range of providers, including ChatGPT, Gemini and Claude, as well as self-hosted models for those who wish to keep their data secure.

For more conventional chat interaction with an LLM, you can use the LLMTools addon and our ARDI Chat application.